In this post, I will share some of what I learned about Artificial Intelligence and ChatGPT over the past couple of weeks. I'll discuss Natural Language Processors and token similarity, and present examples that show how to complete a statement and how to translate a sentence from French to English. Finally, I will give a preview of my first chatbot written in C#.

Natural Language Processors

ChatGPT is a Natural Language Processor (NLP): a type of Artificial Intelligence model that helps computers understand, interpret, and generate human language. NLPs are commonly used to build chatbots that generate human-like responses to user input.

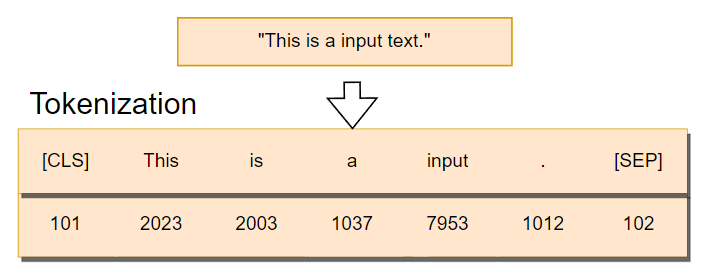

To do this, the NLP first breaks the user's text down into smaller units known as "tokens", which may be whole words, parts of words, or punctuation marks, as in the following diagram (source).

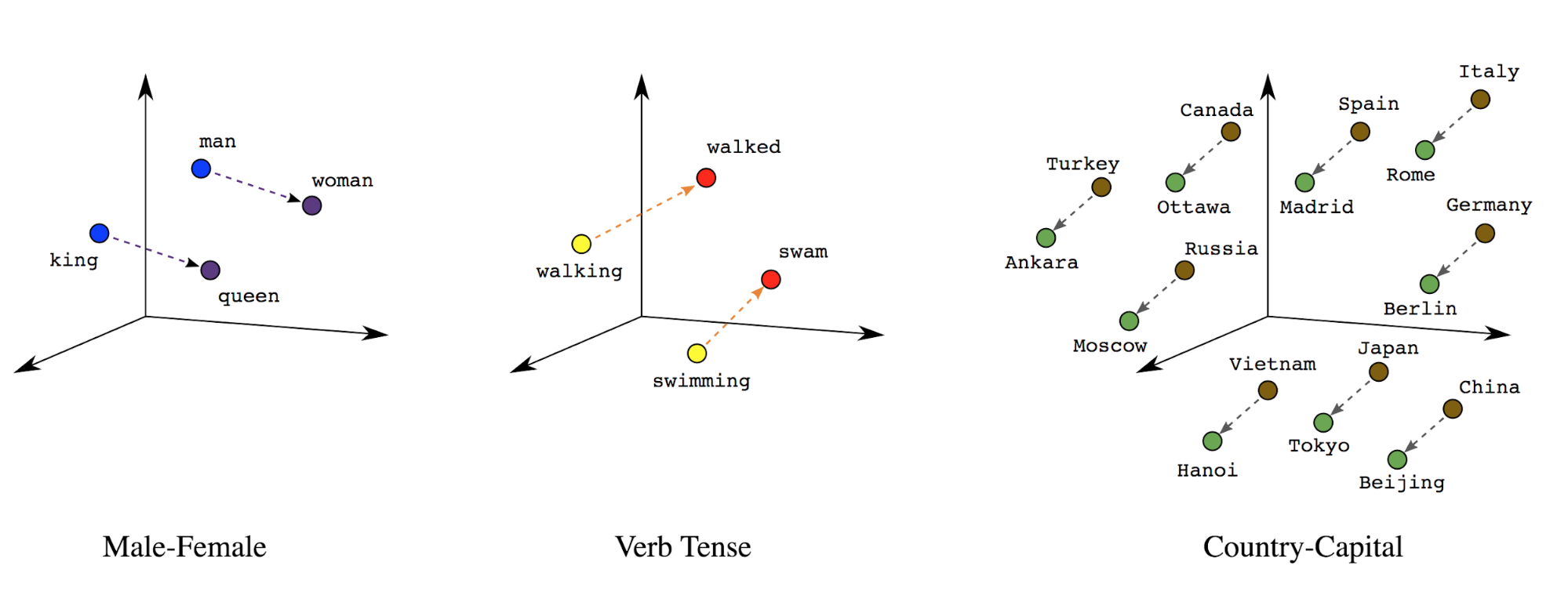
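As a rough illustration of tokenization, here is a minimal Python sketch. It assumes a very simple rule (split on words and punctuation); the function name `simple_tokenize` is made up for this post. Production models such as ChatGPT use learned subword tokenizers instead, so their tokens will differ from these.

```python
import re

def simple_tokenize(text):
    """Split text into lowercase word and punctuation tokens (toy rule, not a real model tokenizer)."""
    # \w+ matches a run of letters or digits; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text.lower())

print(simple_tokenize("Once upon a time, there was a chatbot."))
```

Each punctuation mark becomes its own token, which is one reason a token count is usually higher than a word count.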

These tokens are then converted into mathematical representations called "vectors," which allow the AI model to analyze and compare them for similarity. The following diagram shows how this looks (source).

Cosine Similarity

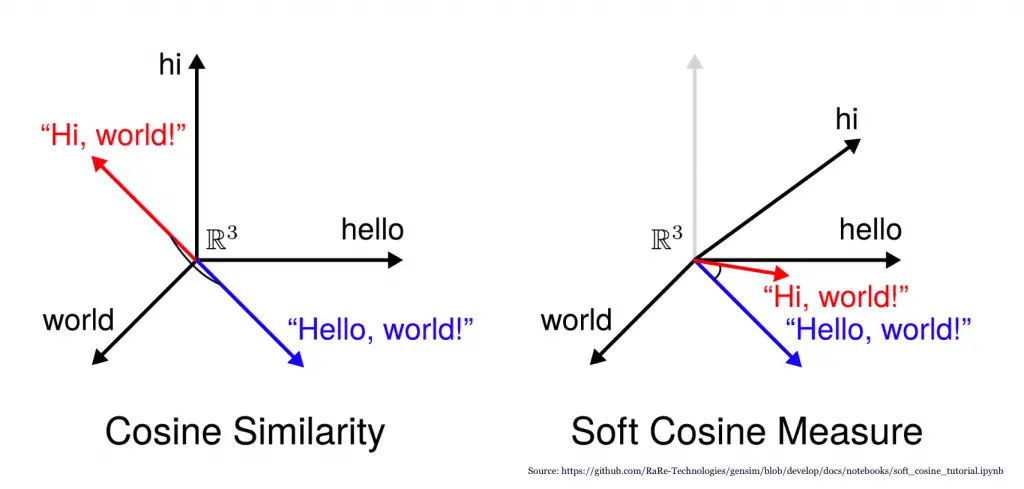

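Cosine similarity is a measure used in NLP to compare two vectors by the angle between them. Its value ranges from -1 to 1: a value of 1 means the vectors point in the same direction, 0 means they are unrelated (orthogonal), and -1 means they point in opposite directions.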
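To make the measure concrete, the following Python sketch computes cosine similarity as the dot product of two vectors divided by the product of their lengths. The three-dimensional "word vectors" are invented for illustration; real embeddings have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

# Made-up toy vectors, not taken from any real model.
king = [0.9, 0.8, 0.1]
queen = [0.85, 0.82, 0.15]
banana = [0.1, 0.05, 0.95]

print(cosine_similarity(king, queen))   # close to 1: similar direction
print(cosine_similarity(king, banana))  # much lower: different direction
```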

Measures like this let a model judge which tokens are related in meaning. ChatGPT builds on such vector representations, learned from a very large body of text, to predict contextually relevant and grammatically correct continuations of the user's prompt (diagram source).

How is the response generated?

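To turn these vector representations into a complete response, ChatGPT relies on a Transformer-based neural network that repeatedly predicts the next token. More broadly, NLP systems draw on a combination of natural language processing and machine learning techniques, including the ones listed below.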

Natural Language Processing Techniques

- Tokenization: breaking down the text into individual words or phrases (tokens) for analysis.

- Part-of-Speech Tagging: identifying the part of speech of each token in a sentence.

- Named Entity Recognition: identifying and extracting named entities (such as people, places, and organizations) from the text.

- Sentiment Analysis: determining the sentiment or emotion conveyed in a piece of text.

Machine Learning Techniques

- Recurrent Neural Networks (RNNs): a type of neural network that can process sequential data, such as text, by maintaining a memory of previous inputs.

- Transformers: a type of neural network that uses self-attention mechanisms to process and analyze text, resulting in more accurate predictions.

- Gradient Boosting: a type of ensemble learning algorithm that combines multiple weak models to make more accurate predictions.

- Support Vector Machines (SVMs): a type of machine learning algorithm used for classification that separates data into classes using a hyperplane in a high-dimensional space.
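Of the techniques listed above, self-attention is the one most closely tied to ChatGPT. The sketch below is a heavily simplified version in plain Python: it assumes the queries, keys, and values are the input vectors themselves, whereas a real Transformer first passes them through learned projection matrices. The function names are illustrative only.

```python
import math

def softmax(scores):
    """Convert a list of scores into weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Simplified scaled dot-product self-attention with no learned weights."""
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Score the current token against every token, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all the input vectors.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
    return outputs

print(self_attention([[1.0, 0.0], [0.0, 1.0]]))
```

Every output vector blends information from all tokens in the input, weighted by how strongly each token relates to the current one.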

Overall, combining natural language processing and machine learning techniques enables NLP models to interpret the meaning and context of a text and to generate responses that are more accurate, contextually relevant, and human-like.

How do I write a basic chatbot?

Most of what has been discussed so far is hidden from the developer behind the APIs of OpenAI, Azure AI, and others, but understanding how tokens work is important for writing better code and managing costs.
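To see why tokens matter for cost, here is a back-of-the-envelope Python sketch. It assumes the common rule of thumb of roughly four characters per token for English text, and it assumes the provider bills for both prompt and generated tokens. The price constant is a hypothetical placeholder, not a real quote; check the provider's pricing page for actual figures.

```python
import math

CHARS_PER_TOKEN = 4          # rough rule of thumb for English; real tokenizers differ
PRICE_PER_1K_TOKENS = 0.002  # hypothetical price in USD, for illustration only

def estimate_tokens(text):
    """Roughly estimate how many tokens a piece of text will use."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)

def estimate_cost(prompt, max_tokens, price_per_1k=PRICE_PER_1K_TOKENS):
    """Upper-bound cost of one request: prompt tokens plus the maximum completion length."""
    total_tokens = estimate_tokens(prompt) + max_tokens
    return total_tokens / 1000 * price_per_1k

prompt = "Once upon a time"
print(estimate_tokens(prompt))
print(estimate_cost(prompt, max_tokens=100))
```

Because the estimate uses the maximum completion length, it is a ceiling: a shorter reply costs less.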

When we write code to access NLPs, we think in terms of "creating completions", "translating", and so on. The following examples should give an idea of the kind of APIs we will be calling.

Text Generation

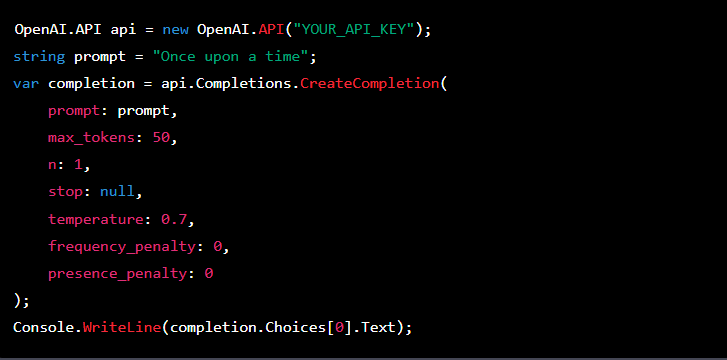

This example generates a text completion for the prompt "Once upon a time", using the OpenAI API.

The max_tokens parameter controls the maximum length of the generated text, while the temperature parameter controls the "creativity" of the text (higher values result in more unpredictable text).
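The effect of these two parameters can be sketched without calling any API. The Python toy below assumes a three-word vocabulary with made-up scores and, unlike a real model, reuses the same scores at every step. It only shows how temperature reshapes the probabilities and how max_tokens caps the output length.

```python
import math
import random

def apply_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        # Real APIs often treat 0 as "always pick the most likely token";
        # this sketch simply rejects it to avoid dividing by zero.
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_tokens(vocab, logits, max_tokens, temperature, seed=0):
    """Draw at most max_tokens tokens from the temperature-adjusted distribution."""
    rng = random.Random(seed)
    probs = apply_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=max_tokens)

vocab = ["there", "a", "dragons"]  # toy vocabulary
logits = [2.0, 1.0, 0.1]           # made-up scores

print(apply_temperature(logits, 0.2))  # low temperature: the top token dominates
print(apply_temperature(logits, 2.0))  # high temperature: probabilities even out
print(sample_tokens(vocab, logits, max_tokens=5, temperature=0.7))
```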

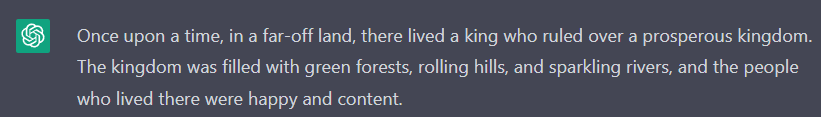

The answer would be similar to this:

This first example is the foundation of more complex examples like the ChatGPT client itself, which can be implemented as a loop that repeats this completion call while "remembering" everything that has been said since the beginning of the chat, typically by sending the conversation so far along with each new prompt.
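The "remembering" loop can be sketched in a few lines of Python. This is a minimal illustration, not the ChatGPT client's actual implementation: `echo_model` is a hypothetical stand-in for a real completion call, and the role/content message shape is an assumption modeled on chat-style APIs.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

def chat_turn(history: List[Message], user_text: str,
              complete: Callable[[List[Message]], str]) -> str:
    """Add the user's message, ask the model for a reply, and remember both."""
    history.append({"role": "user", "content": user_text})
    reply = complete(history)  # the model sees the whole conversation so far
    history.append({"role": "assistant", "content": reply})
    return reply

def echo_model(history: List[Message]) -> str:
    """Hypothetical stand-in for a real API call; it only proves the history is visible."""
    user_turns = [m for m in history if m["role"] == "user"]
    latest = user_turns[-1]["content"]
    return f"You have sent {len(user_turns)} message(s); the latest was: {latest}"

history: List[Message] = []
for text in ["Hello", "What did I say first?"]:
    print(chat_turn(history, text, echo_model))
```

Since the full history is sent on every turn, the token count (and therefore the cost) of each request grows as the chat gets longer.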

Language Translation

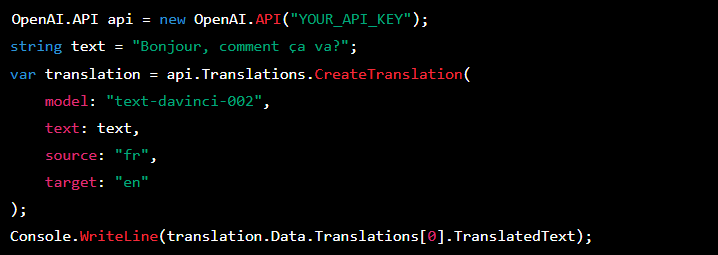

This example uses the OpenAI API to translate the text "Bonjour, comment ça va?" from French to English.

The model parameter specifies the translation model to use, while the source and target parameters specify the source and target languages for the translation. The resulting translation is printed to the console.

The response will be similar to "Hello, how are you?".
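With a general-purpose completion model, one common way to express a translation is to fold the source and target languages into the prompt itself. The Python sketch below only builds such a request body and makes no network call. The function name, the "example-model" placeholder, and the chosen field values are assumptions for illustration, reusing the parameter names mentioned in this post.

```python
import json

def build_translation_request(text, source, target, model="example-model"):
    """Build a completion-style request body that asks for a translation."""
    prompt = f"Translate the following text from {source} to {target}:\n\n{text}"
    return {
        "model": model,      # placeholder name, not a real model identifier
        "prompt": prompt,
        "max_tokens": 60,    # translations of short sentences need few tokens
        "temperature": 0.0,  # low temperature keeps the output predictable
    }

request = build_translation_request("Bonjour, comment ça va?", "French", "English")
print(json.dumps(request, ensure_ascii=False, indent=2))
```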

Next steps

In the next post, I will show how I created my first chatbot in C#, using what I have learned so far and the help of Microsoft Semantic Kernel.

For more information on Microsoft Semantic Kernel go here: